- Get link

- X

- Other Apps

Almost every web application wants a search functionality on their website. And preferably one where you have things like correcting spelling errors and order by relevance. This seems like very simple for an enduser, but for a developer making a good site search functionality is hard.

3rd party web services

A simple solution would be using a web service. A web service can scrape your own site. You are however limited to only public pages and there is very little control in search results. For example if you don't pay for Google Custom Search for example, you will end up with Adwords of your competitors showing up in the search results on your site. For most websites that require authentication these tools are useless.

Elasticsearch

ElasticSearch is the most common service used to index applications. You do however need to write your own code to index a record. For example you can log it like this:

{

"resource": "user",

{

"resource": "user",

"id": "1214fwfwqfqw"

"indexes": ["Mr.", "Rowan", "Atkinson", "active"]

}

A PHP application could ask ElasticSearch to find everything with index "Rowan" in order of relevance and then let the application do a database query to retrieve the actual data.

It's especially good at indexing large quantity of data and implementing fuzzy search (so handling typing errors), ElasticSearch is however not without faults. The initial load to run ElasticSearch makes ElasticSearch a bit overkill for small datasets where you only have like around 1000 records. You also need to store data in 2 places for searching(in ElasticSearch and in the database) and combine the data from both sources. In small datasets it would be better to just let the database handle this.

Search in database

In case you want to do a text search with a database only you can make a large query where you compare every text with every relevant database column. For example if I do a text search for "Rowan Atkinson" on User I could end up with a query like this:

SELECT *

FROM user

WHERE email LIKE '%rowan%'

OR email LIKE '%atkinson%'

OR firstName LIKE '%rowan%'

OR firstName LIKE '%atkinson%'

OR lastName LIKE '%rowan%'

OR lastName LIKE '%atkinson%'In case I want to order this by relevance, the only solution would be something like this:

SELECT *

FROM user

WHERE email LIKE '%rowan%'

OR email LIKE '%atkinson%'

OR firstName LIKE '%rowan%'

OR firstName LIKE '%atkinson%'

OR lastName LIKE '%rowan%'

OR lastName LIKE '%atkinson%'

ORDER BY (

IF(email LIKE '%rowan%', 1, 0)

+ IF(email LIKE '%atkinson%', 1, 0)

+ IF(firstName LIKE '%rowan%', 1, 0)

+ IF(firstName LIKE '%atkinson%', 1, 0)

+ IF(lastName LIKE '%rowan%', 1, 0)

+ IF(lastName LIKE '%atkinson%', 1, 0)

) DESCAs you can see, it can be done, but this results in a very unmaintainable query and the relevance ordering is also a bit too limited. We also need to add an index to all these columns. You could make the query smaller if you use regular expressions, but it will actually perform worse on a large datasets and have to deal with client input sanitization to avoid DDOS attacks on your server.

SELECT *

FROM user

WHERE email REGEXP '(rowan|atkinson)'

OR firstName REGEXP '(rowan|atkinson)'

OR lastName REGEXP '(rowan|atkinson)'

We could create a full text search index if the database supports this, but the problem of full text search queries is that they are database vendor specific and also only support a few column types. Also full text search does not care about the contents type, so if a colum contains HTML tags, you could search on the html tags with the full text search. We would need to store all our search terms as a string for a more optimized text search to start with.

A simple solution would be to store the search indexes in a separate database table and count the number of words found. This is a how a tf (Term Frequency) search works:

Creating an indexer service

You would need some indexer service that counts all words used in some entity. As I have shown in a previous article I made a simple composer package that counts words. You can write your own indexer like this:

use Apie\CountWords\CountWords;

/**

* @return array<string, int>

*/

function getIndexesForUser(User $user): array {

$counts = [];

$counts[$user->getId()] = 1;

$counts = CountWords::countFromString($user->getEmail(), $counts);

$counts = CountWords::countFromString($user->getFirstName(), $counts);

$counts = CountWords::countFromString($user->getLastName(), $counts);

return $counts;

}

In my Apie library there is an indexer service that you can use with simple defaults or configure entirely yourself with custom IndexingStrategyInterface implementation:

$indexer = new \Apie\Core\Indexing\Indexer(

new FromEnum(),

new SkipPasswordFields(),

new FromGetters()

);

$indexer = \Apie\Core\Indexing\Indexer::create(); // simple indexer instantiation.

$indexList = $indexer->getIndexesFor($user, new ApieContext());

It depends a bit what ORM you are using to store the indexes in the database and I want to keep it out of the scope for this article.

Database example with search index table

So what did we gain from this? We can now add our own searching terms which may be derived from the entity. For example if we would index the createdAt we could add an index on what day of the week the user was created. We can now also deal with different column types (html, plain text, integer, floating points, dates). We also count the number of times a word is mentioned over multiple fields, which was not possible in our old query.

The only downside is that if we change what we index in the code, we may need to manually recalculate all search indexes for all entities in the database. However it is very much possible to do this in a background job and an end user might not even notice this.

So how does our query look like now? Let's see:

SELECT DISTINCT user.*

FROM user u

JOIN indexes idx ON (idx.user_id = u.id)

WHERE idx.text = 'rowan'

OR idx.text = 'atkinson'

GROUP BY user.id

ORDER BY SUM(idx.count) DESCNow we show relevance as in how many times all words are being used. We now made a TF (term frequency) search. If your SQL server supports full text search, you could also rewrite it to use full text search instead. While it does work to some extent, the problem is that very common words like 'the' will result in irrelevant search results, because almost every text contains the word 'the'.

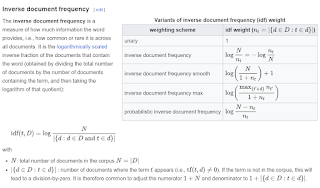

IDF: Inverse document frequency.

IDF stands for 'inverse document frequency' and basically means I get a higher value if only one document knows the search term and get a value closer to 0 if it is used by almost every document. In this case a 'document' would be a (potential) search result.

A quick glance on Wikipedia about IDF looks intimidating with all the mathematical formulas (source: https://en.wikipedia.org/wiki/Tf%E2%80%93idf):

To start with N is the number of documents. In SQL that would be just counting the number of distinct user_id we can find in our indexes table:

SELECT COUNT(DISTINCT indexes.user_id)

FROM indexes;The bottom part is the same thing except we now count the number of documents with a specific search term.

SELECT COUNT(DISTINCT indexes.user_id)

FROM indexes

WHERE indexes.text = 'rowan';So the mathematical formula can be done in SQL very easily:

LOG(

SELECT COUNT(DISTINCT indexes.user_id) FROM indexes

/

SELECT COUNT(DISTINCT indexes.user_id) FROM indexes WHERE indexes.text = 'rowan'

)

Side note: the LOG() function in SQL is not available in the Sqlite code of PHP. You could add it with PDO::createSqlitefunction or remove the LOG() from the query to be faster, but less accurate.

As you can see: if we have a nice index table we can easily calculate the idf and the tf per search term. So how would our query look like if we would look for "Rowan Atkinson"?

SELECT DISTINCT user.*

FROM user u

JOIN indexes idx ON (idx.user_id = u.id)

WHERE idx.text = 'rowan'

OR idx.text = 'atkinson'

GROUP BY user.id

ORDER BY SUM(

idx.count

*

LOG(

SELECT COUNT(DISTINCT indexes.user_id) FROM indexes

/

SELECT COUNT(DISTINCT indexes.user_id) FROM indexes idx2 WHERE idx2.text = idx.text

)

) DESCSee? The mathematical formula was not so hard when you write in a sensible language like SQL.

IDF Optimizations

The formula above would technically work, but it is also very inefficient in retrieving. The reason is that for every text search we recalculate the IDF over all indexes we find. If we search over some entity that does not change often, this is very inefficient as we keep recalculating the same value over and over again. We could simple store the idf in the indexes to speed up the query. Doing so removes the subquery:

SELECT DISTINCT user.*

FROM user u

JOIN indexes idx ON (idx.user_id = u.id)

WHERE idx.text = 'rowan'

OR idx.text = 'atkinson'

GROUP BY user.id

ORDER BY SUM(idx.count * idx.idf) DESCThe downside is that we need to recalculate the idf for all records on every insert, but it outweighs the performance gain you get on searching for data. You could also do a partial update on only a few search terms and do a full update once in a while instead as an enduser will never see these indexes.

Search over multiple database tables

In case you want to do a full text search on all resources the indexing table becomes a bit bigger. A novice Laravel developer might think to use a morph to relation, but this is a bad idea because of the lack of performance and we need performance on large datasets to stay scalable. It's better to create a new column for every relation and make it nullable.

It would become something like this:

As you see in my example I did not link OrderLine with SearchTerm as you should search for orders instead and OrderLine is just a table used by Order.

Fuzzy search

Fuzzy search is that you will still get results even when the user enters a typo error. Though I have not implemented it yet, fuzzy search is doable, but rather complicated. Mysql has a Levehnstein distance method that calculates the similarity between two words, but comparing all words in a indexes table with a search term can not be optimized a lot in SQL.

You could first look up all words that have no search term and do a second query to find similar words, for example if I looked up "Rowa Akinson":

WITH string_array AS (

SELECT 'Rowa' AS text

UNION ALL

SELECT 'Akinson' AS text

)

SELECT sa.text

FROM string_array sa

LEFT JOIN indexes si ON sa.text = si.text

WHERE si.text IS NULL;

SELECT *

FROM indexes idx

WHERE levehnstein(idx.text, 'Rowa') < 2

OR levehnstein(idx.text, 'Akinson') < 2

Other solutions would all be related to making index tables of your index tables or writing a custom Levehnstein method that exits early if the distance is too large for speed up reasons. Vectorizing text could also work, so a search term becomes a set of numbers could also help, but this is PHD level of working with text in SQL.

In case you need fuzzy search I'd advise to stick to ElasticSearch. There are workarounds in case you do want it in SQL, but SQL is not made for it.

My Apie library text search

My apie library does a simple version of TF/IDF in query to do text search and the same indexes are used for column searches with a simple LIKE query. Internally it recalculates the idf value in a column to speed up searching (at the cost of insertion/update time).

The cool side is that as a programmer you do not need to worry about how TF/IDF works as Apie does this on the background. I can add fulltext search functionality later and as a programmer I just need to update my apie library.

I'm now working on integrating uploaded files in Apie and I'd love being able to index uploaded files so you can search for file contents using the simple full text search on an entity.

I know it's often a very much wanted feature on a custom website to add a full text search, but customers often discard it when they see how much effort it would cost to get a rich fulltext search on their website.

Nontheless it might also be beneficial that adding a simple search does not automatically require ElasticSearch as a database is really powerful in finding stuff too.

Comments

Post a Comment